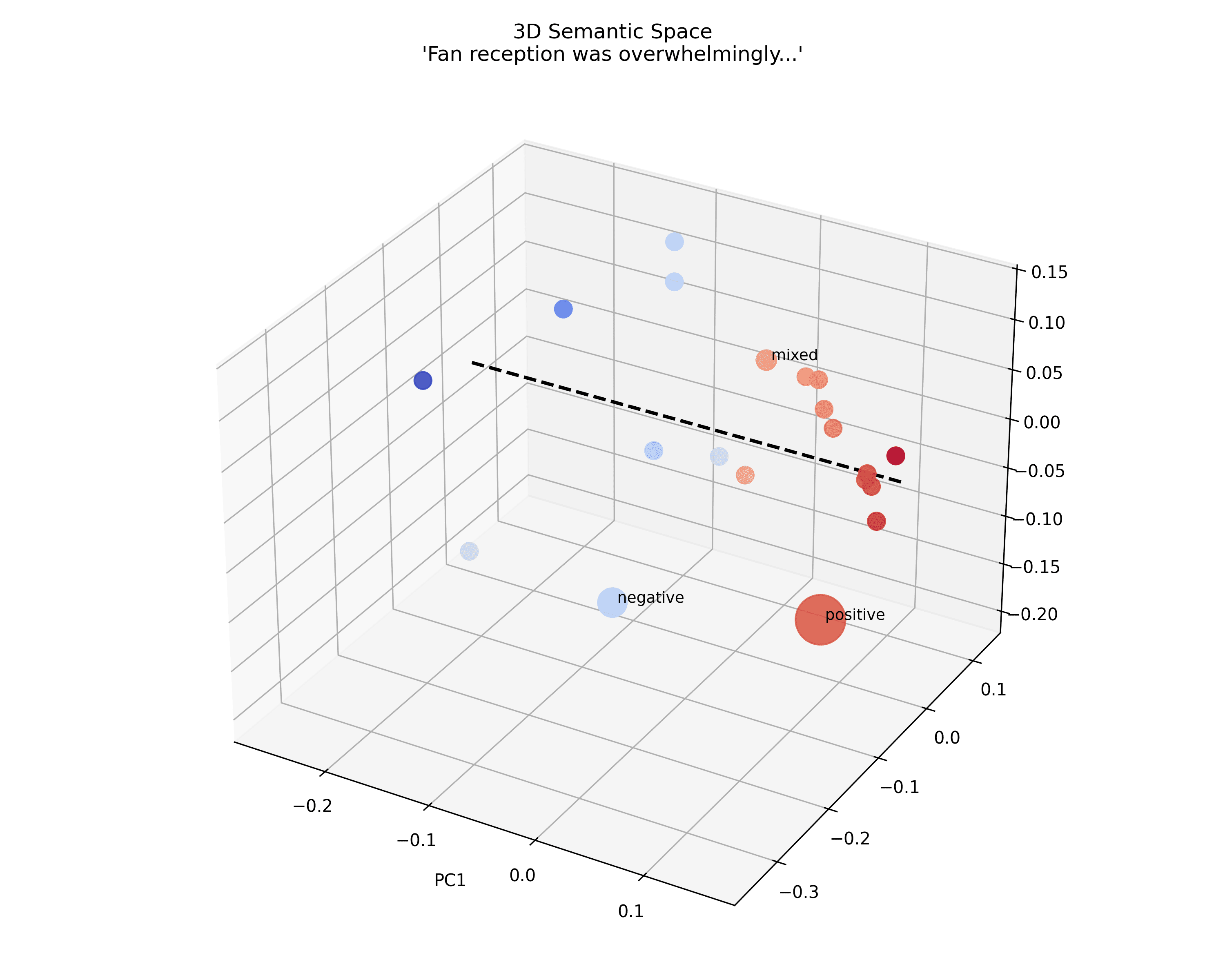

Research: llm, temperature scaling, hallucinations, inference

Authors Apply Temperature Semantically To Reduce LLM Hallucinations

Relevance Score: 5.7

A LessWrong post proposes applying temperature semantically during LLM inference to reduce the hallucinations seen at low temperature. It also asks whether temperature-0 inference should denote the model's highest-confidence output or merely a deterministic one, and invites a rethink of inference semantics and sampling practice for model reliability.
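
For readers unfamiliar with the baseline the post is questioning, here is a minimal sketch of conventional token-level temperature scaling. It is not the semantic method the post proposes, which this summary does not describe in detail. The function names `temperature_probs` and `sample_token` and the example logits are illustrative assumptions, not taken from the post or from any particular library.

```python
import math
import random


def temperature_probs(logits, temperature):
    """Turn raw logits into token probabilities at a given temperature.

    Hypothetical helper for illustration: standard softmax(logits / T),
    with T == 0 treated as the greedy (argmax) limit.
    """
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        # Conventional limit: all probability mass on the largest logit.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature, rng=random):
    """Sample one token index from the temperature-scaled distribution."""
    probs = temperature_probs(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


# Two nearly tied candidates (assumed example values).
close_logits = [2.0, 1.9]
print(temperature_probs(close_logits, 1.0))  # roughly an even split
print(temperature_probs(close_logits, 0.0))  # all mass on index 0
```

With nearly tied logits, temperature 0 always returns index 0 even though the model is close to undecided at temperature 1. Under this conventional scheme, temperature 0 therefore guarantees determinism but says little about confidence, which is the distinction the post raises.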