I. Introduction

A brief overview of the article

Welcome! In this article, we are going to delve deep into the world of Label Encoding, an important technique used in Feature Engineering. This method plays a vital role in converting categorical data into numerical data, which makes it easier for machine learning models to understand and process the information. We will explain every aspect of Label Encoding, starting from its basic concept to the mathematical theory behind it, and even its practical implementation. Our aim is to make this topic simple and easy to understand, so whether you’re a seasoned data scientist or a curious beginner, you’ll find something useful here.

Importance of categorical data handling

But first, why should we care about categorical data? Categorical data takes its values from a fixed set of categories. Sometimes those categories have no natural order, such as the color of a car (red, blue, green) or the genre of a music track (rock, pop, jazz); sometimes they do, such as clothing sizes (small, medium, large). In its raw form, this data is difficult for a machine learning model to use. This is where techniques like Label Encoding come in.

Categorical data, such as names, labels, or other types of identifiers, are quite common in the data we encounter in our daily lives. As human beings, we can easily understand this type of data because our brains are trained to infer context and meaning from it. However, for a computer, understanding categorical data is not as straightforward. A computer algorithm works best with numbers, not words or labels. This is where Label Encoding becomes crucial.

In the world of machine learning and data science, Label Encoding is like a translator, converting human-readable labels into numbers that algorithms and models can process. This lets us feed categorical information to our machine learning models in a format they can use, instead of leaving it out. Let’s start our journey and learn all about Label Encoding, shall we?

II. Understanding Label Encoding

Definition of Label Encoding

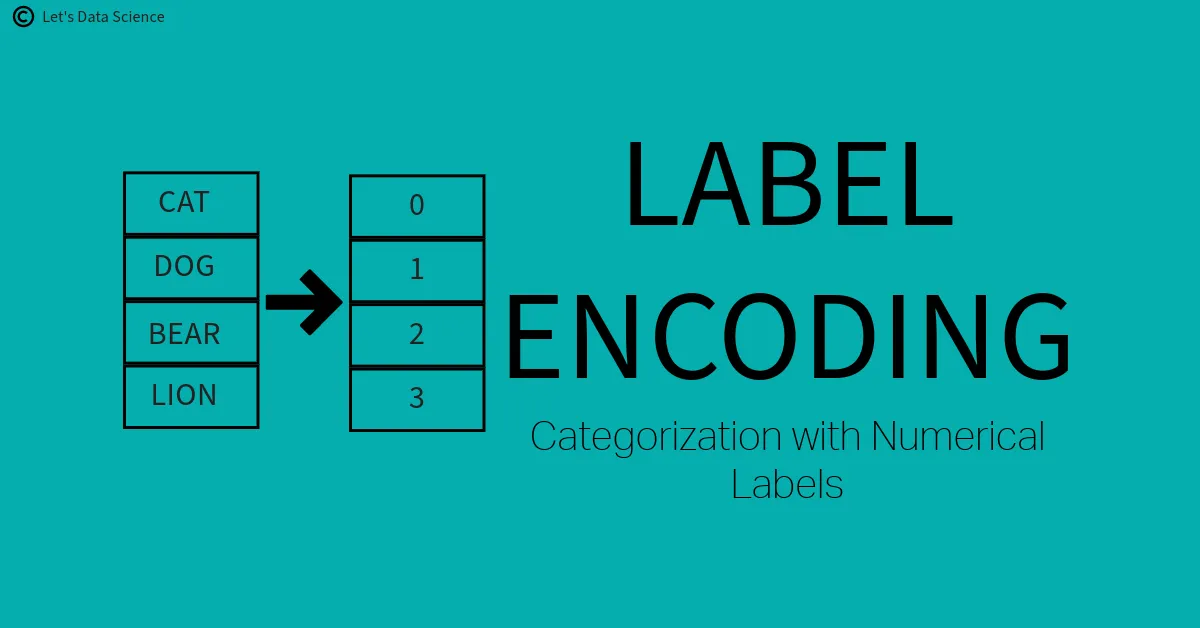

Label Encoding is a popular method used in machine learning to turn categories into numbers. This sounds a bit weird, right? Well, let’s break it down in simple terms.

Imagine you have a box of different kinds of fruits: apples, bananas, cherries, and so on. Now, your job is to put a unique number on each kind of fruit. So you label all the apples as 1, bananas as 2, cherries as 3, and so forth. This way, every type of fruit now has a unique number. This is basically what Label Encoding does!

In technical terms, Label Encoding converts each category label into a number, so that the data is in a machine-readable form. Machine learning algorithms can then use this numeric data for training and prediction.

How Label Encoding Works

Let’s say you have a list of five different cities: New York, London, Tokyo, New Delhi, and Sydney. Now, a machine learning model doesn’t understand words like ‘New York’ or ‘Tokyo’. So, we use Label Encoding to give each city a unique number. We could assign New York as 1, London as 2, Tokyo as 3, New Delhi as 4, and Sydney as 5. Now, our machine learning model can work with this data because it’s in a numerical format!

But it’s important to remember that the numbers we assign are meant only as names, not as amounts or ranks. The number ‘5’ for Sydney doesn’t mean that Sydney is more or less than New York, which is ‘1’. A model that treats this column as an ordinary number cannot know that, though, and may read 5 as “bigger” than 1. We come back to this pitfall in the Cautions and Best Practices section.
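Here is a minimal plain-Python sketch of the city example above, using a dictionary as the lookup table. The names `city_to_code` and `code_to_city` and the sample list are our own, chosen for illustration.

```python
# Cities from the example above, with the codes we chose by hand
city_to_code = {
    "New York": 1,
    "London": 2,
    "Tokyo": 3,
    "New Delhi": 4,
    "Sydney": 5,
}

# Reverse lookup, so encoded values can be turned back into city names
code_to_city = {code: city for city, code in city_to_code.items()}

data = ["Tokyo", "London", "Tokyo", "Sydney"]
encoded = [city_to_code[city] for city in data]
decoded = [code_to_city[code] for code in encoded]

print(encoded)  # [3, 2, 3, 5]
print(decoded)  # ['Tokyo', 'London', 'Tokyo', 'Sydney']
```

Because every city has its own number, the reverse dictionary recovers the original labels exactly.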

Importance of Label Encoding in Feature Engineering

Label Encoding is like a bridge between our categorical data and the model. Without it, many tools cannot use the data at all: scikit-learn’s models, for instance, raise an error when given text columns. By using Label Encoding, we give every category its own distinct number, so the model can tell ‘New York’ and ‘Tokyo’ apart and learn from them.

Role of Label Encoding in Machine Learning Models

In machine learning, our aim is to teach a computer model to make accurate predictions based on data. Label Encoding is often an essential step in this process: by converting categories into numbers, it lets the model use categorical features it would otherwise have to ignore, which can lead to better predictions.

Comparison with numerical data processing

Numerical data is different from categorical data. It’s already in a format that a machine-learning model can understand. This means we don’t need to use Label Encoding for numerical data. But, if we have categorical data, Label Encoding is a vital step to transform this data into a format our model can work with. So, while both numerical and categorical data are important for machine learning, they require different processing methods.

In short, Label Encoding is a powerful technique that helps us prepare our data for machine learning models. Without it, many models cannot use categorical columns at all. With it, our models can take that information into account, learn from it, and use it in their predictions. And the best part is that it’s a simple process that anyone can learn!

III. Mathematical Theory Behind Label Encoding

Mathematical foundation and logic

The concept of Label Encoding is simple and elegant. It’s all about matching pairs – specifically, pairing up categories with numbers. But how does that work in a mathematical sense? Let’s break it down.

In maths, we love to work with numbers. Numbers are predictable, and we have a whole arsenal of mathematical tools that we can use to manipulate and understand them. That’s why we want to turn our categories into numbers.

Imagine we have a set of categories C, where C = {cat1, cat2, cat3...}. These could be anything: names of cities, types of fruits, different car models – you name it!

What we want to do is map these categories to a set of numbers N, where N = {1, 2, 3...}. In other words, we want a function f that gives each category c in C a number n in N, written f(c) = n, such that two different categories never get the same number. That’s our goal.

The simplest way to do this is to assign each category a unique number. We could assign cat1 the number 1, cat2 the number 2, and so on. It’s just like playing a game of matching pairs: for each category, we find its matching number.
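Written out in symbols (our own notation, matching the definitions of C, N and f above), with k distinct categories the “matching pairs” rule is a one-to-one function, which is also why an encoding can always be turned back into the original categories:

```latex
C = \{c_1, c_2, \dots, c_k\}, \qquad N = \{1, 2, \dots, k\}
f : C \to N, \qquad f(c_i) = i
c_i \neq c_j \;\Longrightarrow\; f(c_i) \neq f(c_j)
f^{-1}(n) = c_n \quad \text{for } n \in N
```

Scikit-learn’s LabelEncoder follows the same pattern but counts from 0, using N = {0, 1, ..., k-1} with the categories taken in sorted order.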

But remember, these numbers don’t have any inherent meaning. They are just labels. The category labeled 3 isn’t greater or smaller than the category labeled 2; it just has a different label.

How Label Encoding affects the dimensionality of data

One of the great things about Label Encoding is that it doesn’t change the dimensionality of our data. If we start with one categorical column containing ten categories, we end up with one numeric column containing ten distinct numbers. No extra columns are added; we’ve simply transformed our categories into a different format.

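However, it’s crucial to remember what the numbers mean. Plain Label Encoding hands out numbers without regard to any natural order, so it is mainly used for nominal categorical variables, which are categories that have no order or priority (with the caveat, covered in the Cautions and Best Practices section, that some models still read the numbers as ordered). If our categories have a particular order or hierarchy, a technique like Ordinal Encoding, which assigns numbers that follow that order, is more appropriate.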

In a nutshell, the maths behind Label Encoding is all about creating a unique matching pair of categories and numbers. It’s a simple but powerful concept that allows us to transform our data into a format that’s perfect for machine learning.

And, despite the fancy-sounding name, it’s actually quite simple, isn’t it? Even a kid can understand the idea of matching pairs! So, don’t be intimidated by the jargon. If you can understand matching pairs, you can understand Label Encoding.

IV. Techniques for Label Encoding

When we talk about Label Encoding, we’re generally speaking about turning our categories, or labels, into numbers. But how do we actually do this? Well, there are different ways, or techniques, that we can use. In this section, we’ll explore two of these: Ordinal Encoding and Label Encoding with Scikit-learn. Let’s start with Ordinal Encoding.

Ordinal Encoding

- Concept and Basics: Imagine you’re in a race with your friends. After the race, you all line up in the order that you finished. You came first, your best friend came second, and so on. This is ordinal information, because the order matters. In the same way, some categories have an order too. For example, the categories ‘cold’, ‘warm’, and ‘hot’ have an order: ‘hot’ is more than ‘warm’, and ‘warm’ is more than ‘cold’. This is where Ordinal Encoding comes in. Ordinal Encoding is a type of Label Encoding where the categories are converted into numbers, but unlike plain Label Encoding, the numbers are chosen to follow the order of the categories. Each number represents a different category, and the size of the number tells us where that category sits in the order.

- Mathematical Foundation: The maths behind Ordinal Encoding is pretty simple. It’s just like counting! Let’s say we have three categories: ‘cold’, ‘warm’, and ‘hot’. We could assign the number 1 to ‘cold’, the number 2 to ‘warm’, and the number 3 to ‘hot’. Now our machine learning model can pick up that ‘hot’ is more than ‘warm’, and ‘warm’ is more than ‘cold’, because 3 is more than 2, and 2 is more than 1. One thing to keep in mind: the numbers also suggest equal steps, so the gap from ‘cold’ to ‘warm’ looks the same as the gap from ‘warm’ to ‘hot’, whether or not that is really true.

- Use Cases: Ordinal Encoding is perfect for any situation where we have categories that have an order. This might include things like surveys, where people can respond with ‘strongly disagree’, ‘disagree’, ‘neutral’, ‘agree’, or ‘strongly agree’. Or maybe we’re looking at sizes, like ‘small’, ‘medium’, and ‘large’. Any time there’s an order to our categories, Ordinal Encoding is a great tool to use.

- Advantages and Disadvantages: The great thing about Ordinal Encoding is that it keeps the important order information in our categories. This can be very useful for our machine learning models. But Ordinal Encoding isn’t perfect. If we use it for categories that don’t have an order, it can be misleading. For example, if we tried to use Ordinal Encoding for cities, our model might think that ‘New York’ is more than ‘Tokyo’, just because ‘New York’ happened to get a bigger number. This doesn’t make sense, right? So, we have to be careful when we use Ordinal Encoding.
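As a sketch, scikit-learn’s `OrdinalEncoder` can do this when we pass the order explicitly through its `categories` parameter. Note that it counts from 0 rather than 1, so ‘cold’, ‘warm’, ‘hot’ become 0, 1, 2. The temperature readings below are made-up sample data.

```python
import numpy as np
from sklearn.preprocessing import OrdinalEncoder

# Made-up sample: one feature column of temperature descriptions
temps = np.array([["hot"], ["cold"], ["warm"], ["hot"]])

# Spell out the order ourselves; without it, categories would be sorted alphabetically
encoder = OrdinalEncoder(categories=[["cold", "warm", "hot"]])
codes = encoder.fit_transform(temps)

print(codes.ravel())  # [2. 0. 1. 2.]
```

Leaving out `categories` would sort the values alphabetically (cold, hot, warm), which would put ‘hot’ between ‘cold’ and ‘warm’.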

Now that we’ve learned about Ordinal Encoding, let’s move on to Label Encoding with Scikit-learn.

Label Encoding with Scikit-learn

- Concept and Basics: Scikit-learn is a very popular Python library for machine learning. It’s like a big toolbox that has many of the tools we need, and one of these tools is Label Encoding. With Label Encoding in Scikit-learn, we don’t choose an order for our categories ourselves: each category simply gets a unique number, just like in our fruit example earlier. It’s like giving each of your friends a unique nickname. Each nickname belongs to only one friend, and there’s no meaning behind which friend got which one.

- Mathematical Foundation: The maths behind Label Encoding in Scikit-learn is pretty straightforward. The encoder sorts the distinct categories (alphabetically, for text) and numbers them from 0 upwards, going up by 1 each time. So, if we have five categories, they’ll get the numbers 0, 1, 2, 3, and 4. But remember, these numbers are just labels. The alphabetical order has nothing to do with what the categories mean. Just like with your friends’ nicknames, the number 4 isn’t more or less than the number 0. It’s just a different label.

- Use Cases: Label Encoding with Scikit-learn is great for any situation where we have categories that don’t have an order. This could be things like names of cities, types of fruits, or different car models. Any time there’s no order or hierarchy to our categories, Label Encoding with Scikit-learn is a handy tool to use.

- Advantages and Disadvantages: The advantage of Label Encoding with Scikit-learn is that it’s simple and effective. It’s easy to use, and it does a great job of turning our categories into numbers that our machine learning models can work with. But, like with Ordinal Encoding, we have to be careful. If our categories do have an order, Label Encoding with Scikit-learn can scramble it: because the numbers follow alphabetical order, ‘small’, ‘medium’, ‘large’ would come out as large = 0, medium = 1, small = 2. Our model would then miss out on important order information. So, we need to think carefully about whether our categories have an order before we choose our Label Encoding technique.
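Here is a small sketch of scikit-learn’s `LabelEncoder` applied to the five cities from earlier. Notice that it does not reproduce our hand-made 1 to 5 numbering: it sorts the city names and counts from 0.

```python
from sklearn.preprocessing import LabelEncoder

cities = ["New York", "London", "Tokyo", "New Delhi", "Sydney"]

le = LabelEncoder()
codes = le.fit_transform(cities)

# Categories are numbered 0..k-1 in sorted (alphabetical) order
print(le.classes_.tolist())  # ['London', 'New Delhi', 'New York', 'Sydney', 'Tokyo']
print(codes.tolist())        # [2, 0, 4, 1, 3]

# inverse_transform maps codes back to the original labels
print(le.inverse_transform([4, 0]).tolist())  # ['Tokyo', 'London']
```

The fitted encoder keeps the sorted categories in `classes_`, which is what makes decoding with `inverse_transform` possible.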

So there you have it, two powerful techniques for Label Encoding! Remember, Ordinal Encoding is great for categories that have an order, like ‘cold’, ‘warm’, ‘hot’. And Label Encoding with Scikit-learn suits categories that don’t have an order, like names of cities or types of fruits, as long as we keep in mind that the numbers it hands out carry no meaning. By understanding these techniques, you’re well on your way to becoming a master of Label Encoding!

V. Label Encoding vs Other Techniques

Comparing different techniques of encoding might seem like comparing apples to oranges because they all do things a bit differently. But it’s important to understand their differences, so you can choose the right tool for your data. Here, we’ll compare Label Encoding to three other popular techniques: One-Hot Encoding, Scaling and Normalization, and Frequency Encoding.

Comparison with One-Hot Encoding

Theoretical differences

Imagine you have a bunch of friends, and you want to give them all a unique nickname. With Label Encoding, you might call one friend “1”, another friend “2”, and so on. With One-Hot Encoding, it’s more like giving each friend a different color hat and then, for every color, simply noting whether a given friend is wearing it or not. Each friend is still uniquely identified, but no friend’s label is “bigger” than anyone else’s. That’s the main difference between Label Encoding and One-Hot Encoding.

In other words, One-Hot Encoding turns each category into its own separate column in your data. If a data point belongs to a category, it gets a 1 in that category’s column. If it doesn’t belong, it gets a 0. Hence, each category is like a different color hat!
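The difference is easy to see side by side. This minimal pandas sketch uses a made-up `color` column; `cat.codes` gives label-style integer codes (categories sorted, so blue = 0, green = 1, red = 2), while `get_dummies` gives one 0/1 column per category.

```python
import pandas as pd

df = pd.DataFrame({"color": ["red", "blue", "green", "blue"]})

# Label Encoding: still one column, one integer per category
label_codes = df["color"].astype("category").cat.codes

# One-Hot Encoding: one 0/1 column per category
one_hot = pd.get_dummies(df["color"], prefix="color", dtype=int)

print(label_codes.tolist())      # [2, 0, 1, 0]
print(one_hot.columns.tolist())  # ['color_blue', 'color_green', 'color_red']
print(one_hot.values.tolist())   # [[0, 0, 1], [1, 0, 0], [0, 1, 0], [1, 0, 0]]
```

With three categories the one-hot version already needs three columns, while the label-encoded version stays a single column; that gap grows with every new category.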

Practical differences

In practice, One-Hot Encoding can be very useful, but it can also create a lot of extra columns. For example, if you have a hundred different categories, One-Hot Encoding will create a hundred different columns! This can make your data a bit harder to manage.

Label Encoding, on the other hand, keeps everything in one column. This can be simpler and cleaner, but it also imposes an artificial order and spacing on the categories, which models that treat the column as an ordinary number may pick up on.

When to use which

So when should you use Label Encoding, and when should you use One-Hot Encoding? Well, it depends on your data. If your categories don’t have any order and you don’t have too many of them, One-Hot Encoding can be a good choice. If you have a lot of categories, Label Encoding keeps the data compact; and if your categories have an order, an encoding whose numbers follow that order (Ordinal Encoding) might be better.

Comparison with Scaling and Normalization

Theoretical differences

Scaling and Normalization are a bit different from Label Encoding. They’re like taking a ruler to your data. Instead of giving your categories new names or color hats, you’re adjusting the sizes of your data points.

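Scaling is about changing the range or spread of your data. For example, if you’re measuring the height of your friends in inches, you might rescale the data to feet instead. A common variant in machine learning, standardization, shifts and rescales a feature so that it has a mean of 0 and a standard deviation of 1.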

Normalization, on the other hand, is about changing your data so it fits between 0 and 1. It’s like taking a super long ruler and shrinking it down so it fits in your pocket. The same ruler, just a different size.
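Here is a minimal plain-Python sketch of the three ideas, using made-up heights. Min-max normalization uses (x - min) / (max - min); the standardization step divides by the population standard deviation, which is our choice for this illustration.

```python
from statistics import mean, pstdev

heights_in = [60.0, 66.0, 72.0]  # made-up heights in inches

# Simple rescaling: inches to feet (divide by 12)
heights_ft = [h / 12 for h in heights_in]

# Min-max normalization: (x - min) / (max - min) maps values into [0, 1]
x_min, x_max = min(heights_in), max(heights_in)
normalized = [(h - x_min) / (x_max - x_min) for h in heights_in]

# Standardization: (x - mean) / std gives mean 0 and standard deviation 1
mu, sigma = mean(heights_in), pstdev(heights_in)
standardized = [(h - mu) / sigma for h in heights_in]

print(heights_ft)  # [5.0, 5.5, 6.0]
print(normalized)  # [0.0, 0.5, 1.0]
```

All three operate on numbers that already have a meaningful size, which is exactly what category codes lack.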

Practical differences

In practice, Scaling and Normalization can be very useful when your data is all over the place. They can help bring everything together and make it easier to compare different data points.

Label Encoding doesn’t do this. It’s not about changing the size of your data, but changing the labels of your categories.

When to use which

So, when should you use Label Encoding and when should you use Scaling or Normalization? They actually solve different problems: Scaling and Normalization adjust numerical data whose values are spread out a lot, while Label Encoding turns categories that don’t have any order into numbers. In practice, you often use both on the same dataset, each on its own kind of column.

Comparison with Frequency Encoding

Theoretical differences

Frequency Encoding is another neat trick. It’s like keeping track of how often your friends visit. The more they visit, the higher their score. Instead of giving your categories new names or color hats, or changing their size, you’re counting how often they show up.

In other words, Frequency Encoding replaces each category with the count of how often that category appears in your data. So, if a category shows up a lot, it gets a high number. If it doesn’t show up very often, it gets a low number.
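A minimal pandas sketch, using a made-up list of cities: count each category with `value_counts`, then `map` every value to its count. One difference from Label Encoding shows up right away: two categories that happen to appear equally often get the same number, so Frequency Encoding is not one-to-one.

```python
import pandas as pd

cities = pd.Series(["New York", "London", "New York", "Tokyo", "New York", "London"])

# Count how often each category appears ...
counts = cities.value_counts()
# ... and replace every category with its count
freq_encoded = cities.map(counts)

print(freq_encoded.tolist())  # [3, 2, 3, 1, 3, 2]

# Variant: use proportions instead of raw counts
proportions = cities.map(cities.value_counts(normalize=True))
```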

Practical differences

In practice, Frequency Encoding can be great for dealing with categories that show up a lot. If a certain category is very common in your data, Frequency Encoding can help your machine learning model take notice.

Label Encoding, on the other hand, doesn’t care about how often a category shows up. Each category gets a unique label, no matter how common or rare it is.

When to use which

So, when should you use Label Encoding, and when should you use Frequency Encoding? If you’re dealing with categories that show up with different frequencies, and you think those frequencies are important, Frequency Encoding might be a good choice. But if your categories don’t have any order and the frequency doesn’t matter, Label Encoding could be better.

Remember, there’s no one-size-fits-all answer to which technique is best. It all depends on your data and what you want to do with it. But by understanding the differences between these techniques, you can make an informed choice and pick the right tool for the job.

VI. Label Encoding in Action: Practical Implementation

This is the part where we put theory into practice. We will be working with an actual dataset and applying what we have learned so far about label encoding.

Choosing a Dataset

For our example, we’ll use the ‘Adult’ dataset from the UCI Machine Learning Repository. We chose it because it contains a good mix of categorical and numerical features, so we can encode the categorical ones and leave the numerical ones as they are. It’s also a relatively large dataset (over 30,000 rows), which gives a more realistic picture of real-world data. Furthermore, the prediction task associated with the ‘Adult’ dataset is a binary classification problem (predicting whether a person makes over 50K a year), which is a common type of problem in machine learning.

Here’s the Python code to load the dataset. Please note that we’re using the pandas library to handle our data, which is a very common tool in data science.

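import pandas as pd

# Load the dataset; the raw file has no header row, so we supply the column names
url = "https://archive.ics.uci.edu/ml/machine-learning-databases/adult/adult.data"
names = ['age', 'workclass', 'fnlwgt', 'education', 'education-num', 'marital-status', 'occupation', 'relationship', 'race', 'sex', 'capital-gain', 'capital-loss', 'hours-per-week', 'native-country', 'income']
# Fields in this file are separated by ", "; skipinitialspace=True drops the space
# after each comma so values read as 'Bachelors' and '?', not ' Bachelors' and ' ?'
df = pd.read_csv(url, names=names, skipinitialspace=True)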

Data Exploration and Visualization

Before jumping into the encoding process, it’s always a good idea to take a look at your data. This can give you a sense of what you’re working with, and might reveal some interesting details.

# Display the first few rows of the data

df.head()

In this output, you will see a table displaying the first few rows of the data. Each column represents a different feature (or variable), and each row represents a different person.

Now, let’s get a basic summary of our data.

# Summary statistics for numerical columns

df.describe()

This will output a table with some summary statistics for each numerical column in our data. This includes things like the mean, standard deviation, minimum, and maximum.

# Frequency counts for categorical columns
for column in df.select_dtypes(include='object').columns:
    print("\n" + column)
    print(df[column].value_counts())

This code will output the frequency counts for each category in each categorical column. This can be useful to see how many different categories there are, and how often each one appears.

Data Preprocessing

In our dataset, missing values are marked with ‘?’. We have to handle these before proceeding to encoding. Let’s replace each ‘?’ with np.nan, the standard missing-value marker, to make these missing values more manageable. We’ll use the replace() function in pandas for this.

import numpy as np

# Replace '?' with NaN (use np.nan; the np.NaN alias was removed in NumPy 2.0)
df = df.replace('?', np.nan)

Now we have to decide what to do with these missing values. A common approach is to simply delete any rows that contain missing values. While this is not always the best approach, it’s a good starting point for our example.

# Drop rows with missing values

df = df.dropna()

# Check the new shape of our data

df.shape

This will output the new number of rows and columns in our data. You should see that the number of rows has decreased, indicating that some rows have been removed.

Label Encoding Process

Now we are ready to perform Label Encoding on our data.

Ordinal Encoding

Let’s start with the education column, which is a good candidate for ordinal encoding because the categories have a clear order (from ‘Preschool’ to ‘Doctorate’). Here is the Python code to do this using the OrdinalEncoder from the category_encoders library:

from category_encoders import OrdinalEncoder

# Create an ordinal encoder object, listing the education levels in increasing order
ordinal_enc = OrdinalEncoder(
    cols=['education'],
    mapping=[{'col': 'education',
              'mapping': {'Preschool': 1, '1st-4th': 2, '5th-6th': 3, '7th-8th': 4,
                          '9th': 5, '10th': 6, '11th': 7, '12th': 8, 'HS-grad': 9,
                          'Some-college': 10, 'Assoc-voc': 11, 'Assoc-acdm': 12,
                          'Bachelors': 13, 'Masters': 14, 'Prof-school': 15, 'Doctorate': 16}}]
)

# Apply the ordinal encoding to the education column
df = ordinal_enc.fit_transform(df)

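This code first creates an ordinal encoder object, spelling out the order of the categories in the ‘education’ column, and then applies that encoding to the data. The dataset’s own ‘education-num’ column appears to follow the same 1 to 16 ordering, which makes a convenient sanity check.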

Label Encoding with Scikit-learn

Scikit-learn is a widely used Python library for machine learning, providing various utilities for model training, preprocessing, model selection, etc. One of its components is the preprocessing module, which provides several common utility functions and transformer classes to change raw feature vectors into a representation that is more suitable for the downstream estimators.

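One of these classes is LabelEncoder, which transforms non-numerical labels (as long as they are hashable and comparable) into integers from 0 to n_classes - 1. Scikit-learn’s documentation describes LabelEncoder as intended for target values (y); for input features, its OrdinalEncoder is the documented counterpart. Applying LabelEncoder column by column, as below, still works in practice and keeps us close to the technique this article is about. Here’s how to do it: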

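from sklearn.preprocessing import LabelEncoder

# Work on a copy so the unencoded df stays available for comparison
df_encoded = df.copy()
encoders = {}

# Fit one encoder per categorical (text) column, including the 'income' target
for column in df_encoded.select_dtypes(include='object').columns:
    le = LabelEncoder()                                     # create a label (category) encoder object
    le.fit(df_encoded[column])                              # learn the sorted list of categories
    df_encoded[column] = le.transform(df_encoded[column])   # replace text with integer codes
    encoders[column] = le                                   # keep it for inverse_transform later

# View the labels learned for one column (if you wish)
list(encoders['workclass'].classes_)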

In the above code:

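- We first import the LabelEncoder class from the sklearn.preprocessing module.

- We then make a copy of the data, df_encoded, so the original df is still available for the comparisons below.

- Next, for each text column, we instantiate a new LabelEncoder object and use its fit method to learn that column’s distinct categories, which it stores in sorted order.

- We then use the transform method to replace the categories in that column with their integer codes, and store the fitted encoder so the values can be decoded later with inverse_transform.

- Optionally, we can view the distinct labels of any column using the classes_ attribute.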

Visualizing the Encoded Data

Showcasing how data has transformed

It’s always a good practice to visualize the data after encoding, which helps to understand how data has been transformed. Let’s print the first few rows of the DataFrame before and after encoding:

# Before encoding

print(df.head())

# After encoding

print(df_encoded.head())

Another effective way to demonstrate the transformation is to plot the data. For example, if the dataset has a categorical target variable, you could create a bar plot showing the count of each category.

Comparing performance of models with and without Label Encoding

Label encoding is first of all a way to make categorical data usable at all: scikit-learn’s DecisionTreeClassifier, for example, raises an error if you pass it text columns directly. So, to see what the encoded categories add, let’s compare a model trained only on the columns that are already numeric with one trained on the fully encoded data.

First, train a baseline model without label encoding, using only the numeric columns:

from sklearn.tree import DecisionTreeClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

# Features: only the columns that are already numeric; target: the 'income' column
X = df.select_dtypes(include='number')
y = df['income']

# Split the data
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create a Decision Tree Classifier and train it
clf = DecisionTreeClassifier(random_state=42)
clf.fit(X_train, y_train)

# Predict the test set results
y_pred = clf.predict(X_test)

# Calculate accuracy
accuracy = accuracy_score(y_test, y_pred)
print("Accuracy: %.2f%%" % (accuracy * 100.0))

Next, repeat the process with label encoded data:

# Split the data (same test_size and random_state, so both models see the same rows)
X_train, X_test, y_train, y_test = train_test_split(df_encoded.drop('income', axis=1), df_encoded['income'], test_size=0.2, random_state=42)

# Create a Decision Tree Classifier and train it
clf = DecisionTreeClassifier(random_state=42)
clf.fit(X_train, y_train)

# Predict the test set results
y_pred = clf.predict(X_test)

# Calculate accuracy
accuracy = accuracy_score(y_test, y_pred)
print("Accuracy: %.2f%%" % (accuracy * 100.0))

By comparing the performance of the two models, we can evaluate the effect of label encoding on our machine learning model. Note, however, that the impact of label encoding can vary depending on the dataset and the model used.

In conclusion, label encoding is a handy technique to transform categorical data into numerical format, making it suitable for machine learning algorithms. Its effect on model performance may vary, and it’s essential to be mindful of potential pitfalls, like introducing an unwanted ordinal relationship.


VII. Applications of Label Encoding in Real World

Label Encoding is a popular technique in the field of data science and machine learning. It allows us to transform text data into numbers which our predictive models can understand better. Now, let’s take a look at some real world examples to see how it is used in different scenarios.

1. Medical Diagnosis

Imagine you are a data scientist working in a hospital. The doctors have collected a lot of information about their patients, like symptoms, medical history, and final diagnosis. But here’s the thing – all this information is in text form.

To make this data useful for machine learning, we can use Label Encoding. For example, the symptoms can be labeled from 1 to N (where N is the total number of symptoms). Similarly, the final diagnosis can be labeled in the same way.

With this encoded data, you can now build a machine learning model that helps doctors predict diseases based on patient symptoms!

2. Customer Segmentation

Let’s say you work in a retail company. The company wants to understand its customers better to offer them more personalized services.

The company has data on customer buying behavior, preferences, and demographics. But much of this data is in text form, like ‘gender’, ‘product category’, ‘payment method’, etc.

Here, Label Encoding can be applied to transform these text categories into numbers. For example, ‘gender’ can be encoded as ‘1’ for ‘male’ and ‘2’ for ‘female’. ‘Product category’ can be encoded as ‘1’ for ‘clothing’, ‘2’ for ‘electronics’, and so on.

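Once the data is encoded, you can use machine learning algorithms to segment the customers into different groups based on their buying behavior and preferences. Keep in mind that distance-based clustering methods such as k-means treat the codes as distances, so for nominal fields like ‘product category’ One-Hot Encoding is often the safer choice. Either way, this will help the company offer more tailored services to its customers!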

3. Sentiment Analysis

Imagine you are a data analyst in a company that wants to understand how people feel about its products by analyzing social media posts. The company has collected a lot of data from Twitter, including the text of the tweets and the emojis used.

Since machine learning algorithms can’t work with text data directly, you can use Label Encoding to convert the emojis into numbers. For instance, a happy face emoji ‘😃’ could be encoded as ‘1’, a sad face ‘😞’ as ‘2’, and so on.

Once the data is encoded, you can build a machine learning model that can analyze the sentiment behind the tweets and give the company a better understanding of how people feel about their products.

4. Weather Prediction

Weather prediction is another application of label encoding. Meteorologists have a lot of weather data like ‘weather type’ (sunny, cloudy, rainy, etc.), ‘wind direction’ (north, south, east, west), etc. All these can be transformed using Label Encoding.

This encoded data can be fed to a machine learning model to predict future weather conditions. Such predictions can be very useful in planning outdoor activities, farming decisions, and even disaster management.

These are just a few examples of how Label Encoding is used in the real world. The technique is versatile and can be applied in many other fields such as finance, transportation, and even sports analytics! The key is to remember when and how to apply it correctly, which you now know from our previous sections.

So, with a little bit of creativity and understanding of your data, you can apply Label Encoding to solve many interesting problems. Happy encoding!

VIII. Cautions and Best Practices

While Label Encoding is a powerful tool in machine learning, it is important to use it wisely. Here are some important cautions and best practices to keep in mind:

When to Use Label Encoding

- Ordinal Categories: Integer codes are a natural fit when your categorical variable is ordinal, that is, when the categories have a clear ranking or order amongst them, as long as the numbers follow that ranking. For example, ratings of a movie (bad, average, good, excellent) should become 0, 1, 2, 3 in that order. Be careful here: scikit-learn’s LabelEncoder numbers categories alphabetically, which would give average = 0, bad = 1, excellent = 2, good = 3, so supply the order explicitly, for example with an ordinal mapping.

- Binary Categories: If your categorical variable has only two categories (like yes/no, true/false), Label Encoding is a good choice. It will simply turn the categories into 0 and 1, which is very easy to work with.

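- Tree-Based Models: Label Encoding can be used when the machine learning model is tree-based, like decision trees or random forests. Trees split a column at thresholds (for example, code ≤ 2 versus code > 2), and with enough splits they can isolate individual categories, so an arbitrary order usually hurts them much less than it hurts other models, even when the categorical data is not ordinal.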
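The movie-rating pitfall from the first point can be checked in a few lines. This sketch uses a made-up list of ratings and a hand-written `rating_order` dictionary (our own name) as the explicit ordinal mapping.

```python
from sklearn.preprocessing import LabelEncoder

ratings = ["good", "bad", "excellent", "average"]

# LabelEncoder numbers categories alphabetically, not by meaning
le = LabelEncoder().fit(ratings)
print(le.classes_.tolist())          # ['average', 'bad', 'excellent', 'good']
print(le.transform(ratings).tolist())  # [3, 1, 2, 0]  ('good' ends up above 'excellent')

# An explicit mapping keeps the real ranking
rating_order = {"bad": 0, "average": 1, "good": 2, "excellent": 3}
ordered_codes = [rating_order[r] for r in ratings]
print(ordered_codes)                 # [2, 0, 3, 1]
```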

When Not to Use Label Encoding

- Nominal Categories: If your categorical variable is nominal (i.e., the categories do not have any order or priority), think twice before using Label Encoding. It introduces an artificial order that can confuse models which treat the codes as amounts. For example, categories like ‘red’, ‘blue’, ‘green’ do not have a clear order amongst them, yet the codes make one of them look “bigger” than the others. With tree-based models this is usually less of a problem, as noted above.

- Non-Tree-Based Models: Label Encoding may not work well with non-tree-based models like linear regression, logistic regression, or neural networks. These models might misunderstand the encoded categories as having an order, leading to less accurate results.
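To see why linear models struggle, here is a toy sketch with made-up numbers: the target is high for blue and red but low for green. With the alphabetical label codes (blue = 0, green = 1, red = 2) as one numeric feature, a straight line cannot follow that up-down-up pattern; with one indicator column per color, a least-squares fit (here via NumPy’s `lstsq`) matches it exactly.

```python
import numpy as np

# Made-up target for the colors blue, green, red (in that order)
y = np.array([10.0, 0.0, 10.0])

# Label Encoding: intercept column plus the codes 0, 1, 2 as one numeric feature
X_label = np.column_stack([np.ones(3), np.arange(3)])
# One-Hot Encoding: one indicator column per color
X_onehot = np.eye(3)

errors = {}
for name, X in [("label", X_label), ("one-hot", X_onehot)]:
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    # Largest gap between the fitted values and the true targets
    errors[name] = round(float(np.abs(X @ coef - y).max()), 2)

print(errors)  # {'label': 6.67, 'one-hot': 0.0}
```

The label-encoded fit is a flat line at about 6.67, missing every point; the one-hot fit gives each color its own coefficient.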

Choosing the Right Method of Label Encoding

Always remember, there is no one-size-fits-all method in feature engineering. You need to consider the nature of your data and the machine learning model you are using. For example, if you have ordinal data, Ordinal Encoding is a good choice. For nominal data, you may want to consider One-Hot Encoding or Frequency Encoding.

Implications of Label Encoding on Machine Learning Models

Label Encoding can significantly impact the performance of your machine learning models. Properly encoded data can improve model accuracy. However, wrongly encoded data can lead to poor results. This is why it’s important to choose the right encoding method for your data.

Tips for Effective Label Encoding

- Understand Your Data: Before you start encoding, take time to understand your data. Is it ordinal or nominal? How many categories are there? Are there any missing values or outliers? This will help you choose the most suitable encoding method.

- Handle Missing Values: Always handle missing values in your data before applying Label Encoding. Missing values can be filled with a new category, the most frequent category, or using a prediction model.

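- Watch Out for High Cardinality: If your categorical variable has many categories (high cardinality), think carefully about your encoding. One-Hot Encoding would create a very wide table, while Label Encoding keeps a single column but spreads the categories over a long range of arbitrary numbers, which makes any spurious ordering more pronounced for models that treat the codes as amounts. In such cases, consider other encoding methods like Frequency Encoding or Target Encoding.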

- Test Different Methods: Try different encoding methods and see which one gives you the best results. You can use cross-validation to measure the performance of your model with different encoding methods.
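For the missing-values tip, one simple option is to give missing entries their own category before encoding, so they get a predictable code instead of depending on how a particular encoder treats NaN. A minimal sketch with a made-up `payment` column and our own placeholder label "Missing":

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import LabelEncoder

payment = pd.Series(["card", np.nan, "cash", "card", np.nan])

# Fill missing values with their own explicit category first ...
filled = payment.fillna("Missing")

# ... then encode as usual (uppercase 'M' sorts before lowercase letters)
le = LabelEncoder()
codes = le.fit_transform(filled)

print(le.classes_.tolist())  # ['Missing', 'card', 'cash']
print(codes.tolist())        # [1, 0, 2, 1, 0]
```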

Remember, the goal of Label Encoding (and any feature engineering method) is to make the data easier to understand for the machine learning models. So, take your time, experiment with different methods, and find what works best for your data. Happy encoding!

IX. Summary and Conclusion

Recap of key points

In this article, we took a deep dive into the world of Label Encoding – a powerful technique for transforming text categories into numbers. We started by understanding what Label Encoding is and why it is important in data science and machine learning. We learned how Label Encoding assigns each category a unique number, making the data understandable to machine learning models.

We went into the mathematical foundations of Label Encoding and saw why it leaves the dimensionality of our data unchanged. We also explored different techniques for Label Encoding, including Ordinal Encoding and using the popular Scikit-learn library.

Next, we compared Label Encoding with other techniques like One-Hot Encoding, Scaling and Normalization, and Frequency Encoding. We discovered the theoretical and practical differences between these methods and learned when to use which.

We then put our knowledge into action by implementing Label Encoding on a real-world dataset. We observed how Label Encoding transformed the data and compared the performance of models with and without it.

We highlighted a number of real-world applications of Label Encoding, showing its versatility and wide usage across different fields. Finally, we shared some cautions and best practices when using Label Encoding, helping you make the most of this technique in your future work.

Closing thoughts

While Label Encoding is a potent tool in your feature engineering toolbox, it’s important to remember that it’s not always the right tool for every job. Integer codes are most suitable for ordinal data (as long as the numbers follow the real order) or for binary categories, and they tend to work well with tree-based models. However, for nominal categories used with non-tree-based models, other encoding techniques might be more appropriate.

It’s also crucial to handle missing values before applying Label Encoding, and to be cautious when dealing with high cardinality categories. Remember, no one encoding method is a magic bullet. It’s always worth trying different methods and seeing which works best with your specific data and model.

Finally, don’t forget the ultimate goal of all this: to make your data easier to understand for your machine learning models. So, keep experimenting, keep learning, and most importantly, have fun along the way!